2020 LINE FRESH Hackathon - LINE Label

LINE Label is an award-winning project that aims to improve the process of collecting AI training data. We built a crowdsourcing platform that lets mobile users spend their spare moments labeling data for artificial intelligence.

The LINE Label project won the Special Jury Award at the 2020 LINE FRESH Hackathon, and the system has been adapted into NTU Business Intelligence Lab's annotation system.

Source Code & Implementation Details

The LINE Label demo application and chatbot are temporarily down due to a change in Heroku's policy, but the implementation details can still be found in the repo below.

Motivation

Many SMEs (small and medium-sized enterprises) in Taiwan are actively developing or searching for AI solutions to enhance their work, but they tend to suffer from a scarcity of data. Data annotation is also not a long-term need for these companies, so building an in-house labeling team rarely makes sense. We believe crowdsourcing can solve this problem. With so many mobile users and the spare moments they have, their contributions to data labeling could add up to enormous productivity.

With the LINE ecosystem, we can easily develop a service that lets mobile users help local businesses label the data they need. This project showcases how this can be done with LINE LIFF and a chatbot application.

Showcase

We embrace the power of the LINE ecosystem and use LIFF (LINE Front-end Framework) to develop the application, so every LINE user has direct access to the app without needing to create a new account.

To distinguish the quality of each annotator, we designed a rating system for users, so that a company that wants data annotated on the platform has a good indication of whether to choose a given user as an annotator. Each user also has a level for each type of mission. For example, a user who is proficient at classification labeling will gain a higher score on classification missions. As a user gains more credit across different types of missions, he/she unlocks more types of annotation tasks, which means more job opportunities. Just like in a game, you level up for more rewards.
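The per-mission level and unlock idea can be sketched roughly as follows. This is a minimal illustration, not the project's actual implementation: the class name, the `XP_PER_LEVEL` constant, and the unlock thresholds are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical numbers: the project does not state its real thresholds.
XP_PER_LEVEL = 100
UNLOCK_AT_TOTAL_LEVEL = {"classification": 0, "ner": 3}


@dataclass
class Annotator:
    name: str
    # Experience points earned per mission type, e.g. {"classification": 350}
    xp: Dict[str, int] = field(default_factory=dict)

    def add_xp(self, mission_type: str, points: int) -> None:
        """Credit experience to one mission type only."""
        self.xp[mission_type] = self.xp.get(mission_type, 0) + points

    def level(self, mission_type: str) -> int:
        """Level on a specific mission type."""
        return self.xp.get(mission_type, 0) // XP_PER_LEVEL

    def total_level(self) -> int:
        """Sum of levels across every mission type the user has worked on."""
        return sum(self.level(m) for m in self.xp)

    def unlocked_types(self) -> List[str]:
        """Mission types whose (assumed) total-level threshold is met."""
        total = self.total_level()
        return sorted(t for t, need in UNLOCK_AT_TOTAL_LEVEL.items() if total >= need)
```

In this sketch a new user starts with only classification tasks, and NER tasks unlock once enough levels have been earned.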

On the mission board, users see all the tasks currently available to them. The task owner (i.e. the company user) can set a filter on their target annotators. They can require a higher user level or rating to achieve better annotation quality, but this is also reflected in the price charged.
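A minimal sketch of how such a filter could work is shown below. The `User` and `Task` types, the 0 to 5 rating scale, and the surcharge formula in `price` are assumptions made for illustration only; the real pricing rule is not described here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class User:
    level: int     # level on the task's mission type
    rating: float  # assumed to be an average on a 0-5 scale


@dataclass
class Task:
    title: str
    base_price: float
    min_level: int = 0       # filter set by the task owner
    min_rating: float = 0.0  # filter set by the task owner

    def price(self) -> float:
        # Hypothetical surcharge: stricter requirements cost the owner more.
        factor = 1 + 0.10 * self.min_level + 0.05 * self.min_rating
        return round(self.base_price * factor, 2)


def mission_board(user: User, tasks: List[Task]) -> List[Task]:
    """Return only the tasks whose owner-defined filter the user passes."""
    return [
        t for t in tasks
        if user.level >= t.min_level and user.rating >= t.min_rating
    ]
```

A task with no requirements is visible to everyone at its base price, while a task that demands a higher level or rating is shown to fewer users and costs its owner more.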

Classification Task

The classification task's annotation interface looks like this. It shows several pictures, and the user chooses the ones that best match the subject description.

Also note that we randomly insert some validation data into the annotation process in order to monitor the likely accuracy of each batch of data. This lets us filter out unreliable annotators with low accuracy scores. We show users how well they performed after they reach a certain level of annotation, and we use the accuracy score to award experience points to the user.
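The validation step can be sketched as below, assuming the validation data is a set of items with known labels. The function names, the `max_points` value, and the linear mapping from accuracy to experience points are hypothetical and are not taken from the project.

```python
import random
from typing import Dict, List, Optional


def build_batch(unlabeled: List[str], gold: Dict[str, str],
                n_gold: int, seed: Optional[int] = None) -> List[str]:
    """Mix n_gold validation items (known labels) into a batch at random positions."""
    rng = random.Random(seed)
    batch = unlabeled + rng.sample(sorted(gold), n_gold)
    rng.shuffle(batch)
    return batch


def gold_accuracy(answers: Dict[str, str], gold: Dict[str, str]) -> Optional[float]:
    """Accuracy measured only on the validation items the user answered."""
    checked = [item for item in answers if item in gold]
    if not checked:
        return None  # nothing to measure yet
    correct = sum(answers[item] == gold[item] for item in checked)
    return correct / len(checked)


def experience_points(accuracy: float, max_points: int = 50) -> int:
    """Assumed linear reward: higher accuracy earns more experience."""
    return round(accuracy * max_points)
```

Because the user cannot tell validation items from ordinary ones, accuracy on the validation subset serves as an estimate of accuracy on the whole batch.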

Video Demo of Classification Task

NER (Named Entity Recognition) Task

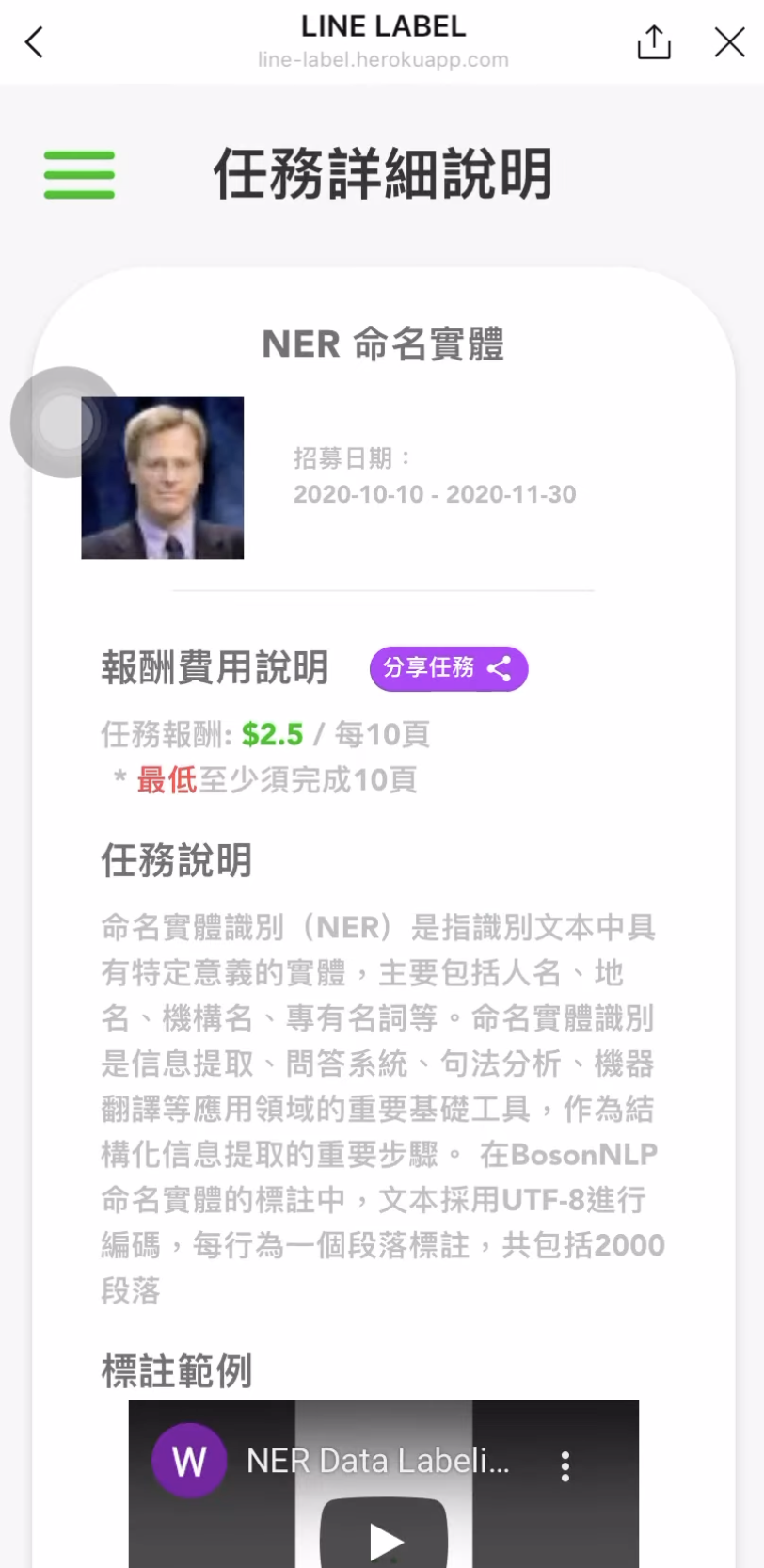

Before each task starts, the mission owner provides a mission description and statement for annotators. Example images and a tutorial video are also provided to help them achieve better annotation quality. The mission description page also shows the reward for the annotation service, so users can decide whether to take the task.

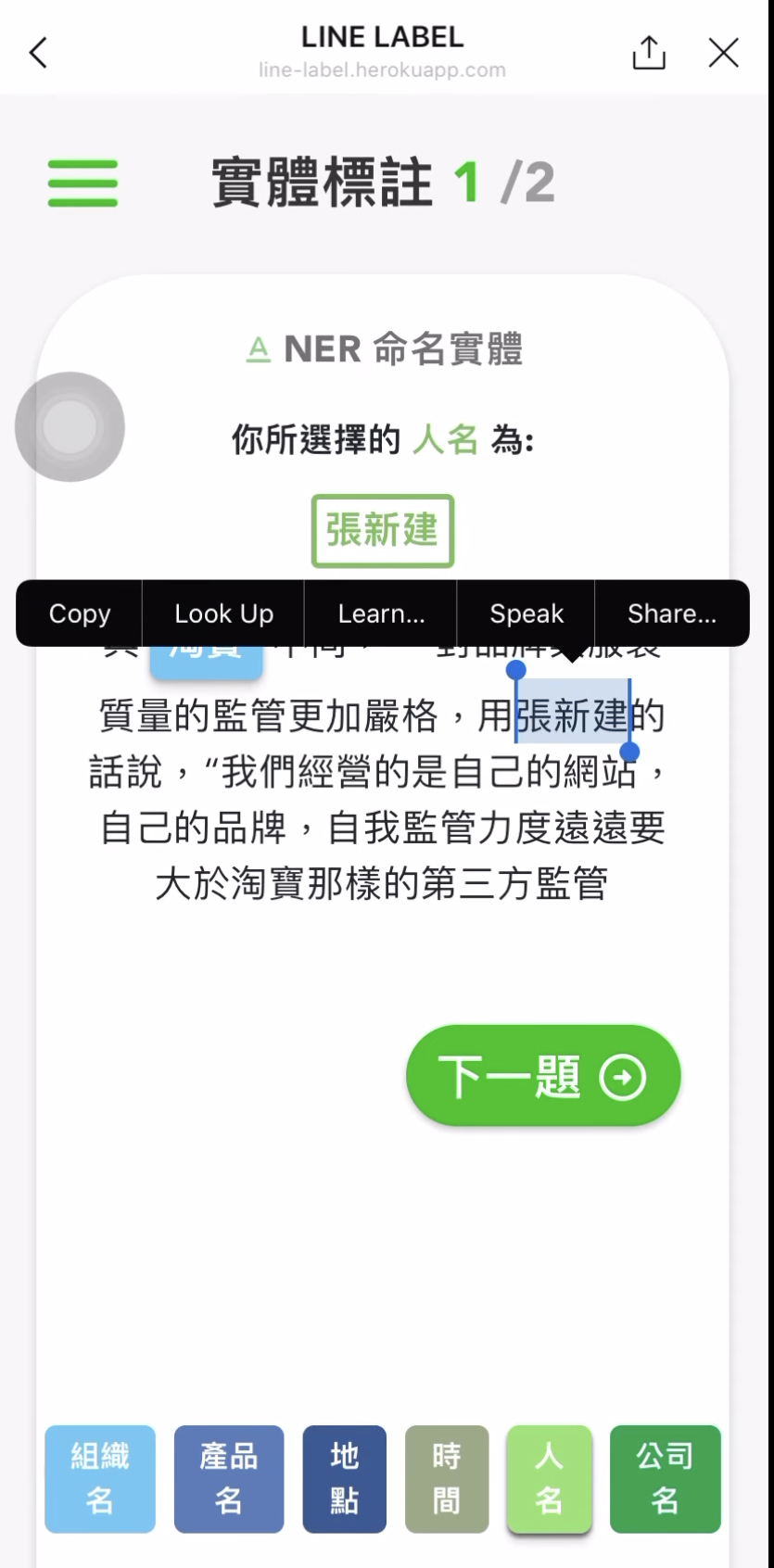

In the NER task, the objective is to identify named entities and classify them into different types. Users first select the entity type they want to annotate by tapping the button at the bottom.

Users can then long-press and drag (highlight) on the screen to label a target entity within a paragraph. Each paragraph may contain several entities to be recognized.
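One way to store such highlights is as character-offset spans tagged with the selected entity type. The sketch below is an assumption about the data model, not the project's actual code: the `Span` type, the helper names, and the rule that spans may not overlap are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Span:
    start: int  # inclusive character offset in the paragraph
    end: int    # exclusive character offset
    label: str  # entity type chosen with the button, e.g. "PERSON"


def add_span(spans: List[Span], new: Span, text: str) -> List[Span]:
    """Return a new span list including `new`, kept sorted by position."""
    if not (0 <= new.start < new.end <= len(text)):
        raise ValueError("span is outside the paragraph")
    # Assumed policy: entities in one paragraph may not overlap.
    if any(new.start < s.end and s.start < new.end for s in spans):
        raise ValueError("span overlaps an existing annotation")
    return sorted(spans + [new], key=lambda s: s.start)


def entities(spans: List[Span], text: str) -> List[Tuple[str, str]]:
    """Recover the (entity text, entity type) pairs from the stored spans."""
    return [(text[s.start:s.end], s.label) for s in spans]


text = "Alice joined LINE in Taipei."
spans: List[Span] = []
start = text.index("Taipei")
spans = add_span(spans, Span(start, start + len("Taipei"), "LOCATION"), text)
spans = add_span(spans, Span(0, len("Alice"), "PERSON"), text)
print(entities(spans, text))  # [('Alice', 'PERSON'), ('Taipei', 'LOCATION')]
```

Storing offsets instead of the highlighted text itself keeps repeated words unambiguous and lets several entities coexist in one paragraph.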

Video Demo of NER Task